The IMPACT team joined colleagues from U Twente at the AI in Education Hackathon from November 8 to 10. We prototyped a dashboard for AI-driven feedback on the multimodal and didactical quality of tutorials, online lectures and explanatory videos.

Fig. 1: Our presentation at the Design Lab (U Twente)

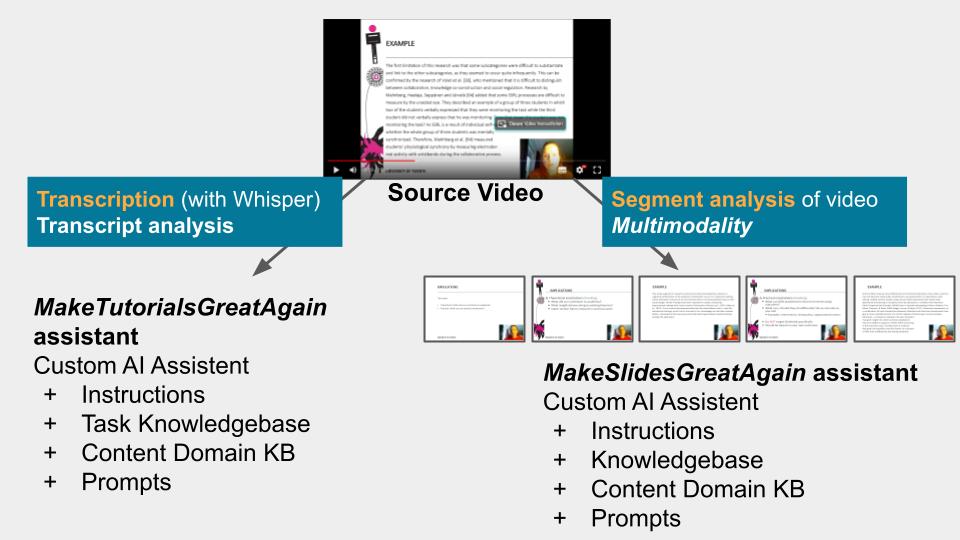

In my sub-team, together with Jan and Fatima, we first created, tested and optimized domain-specific AI Assistants based on the gpt-4-1106-preview model (through both prompt engineering and knowledge base curation). We then prototyped an automated pipeline for downloading videos, transcribing the audio (with the Whisper model) and extracting the slides or other forms of visualization from the videos (using FFmpeg), then feeding the results into our custom AI Assistants. We did a lot of interactive prompt engineering in ChatGPT-4 and LLaVA for the slide analysis.

Fig. 2: Basic multimodal analysis pipeline

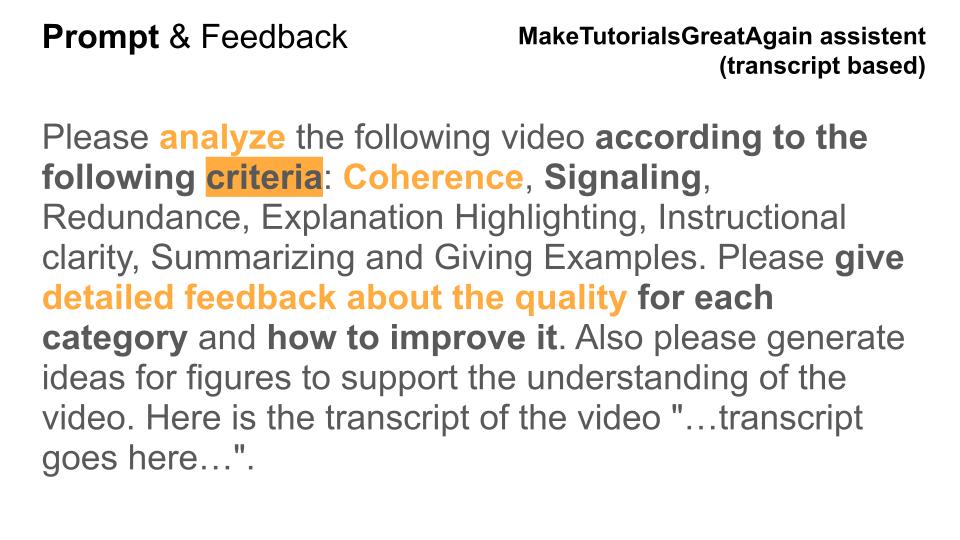

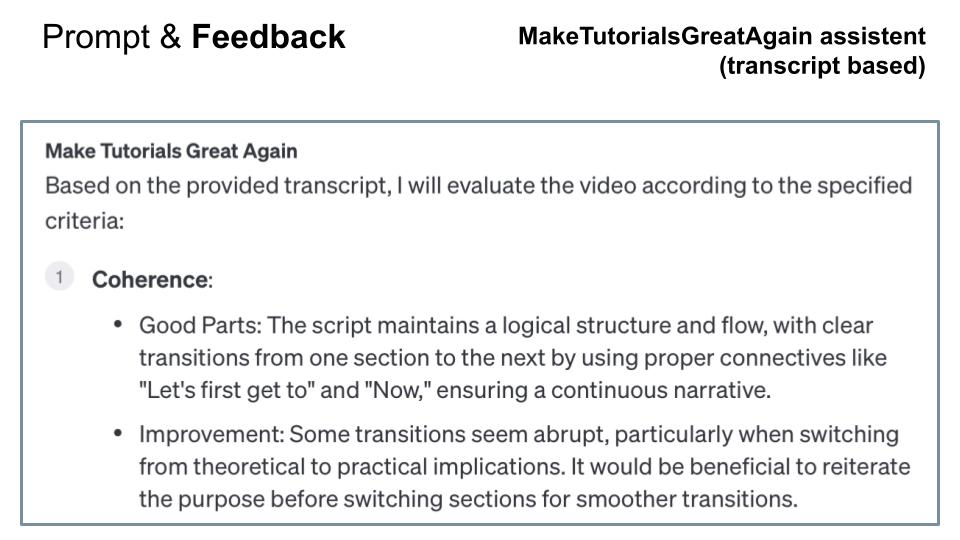

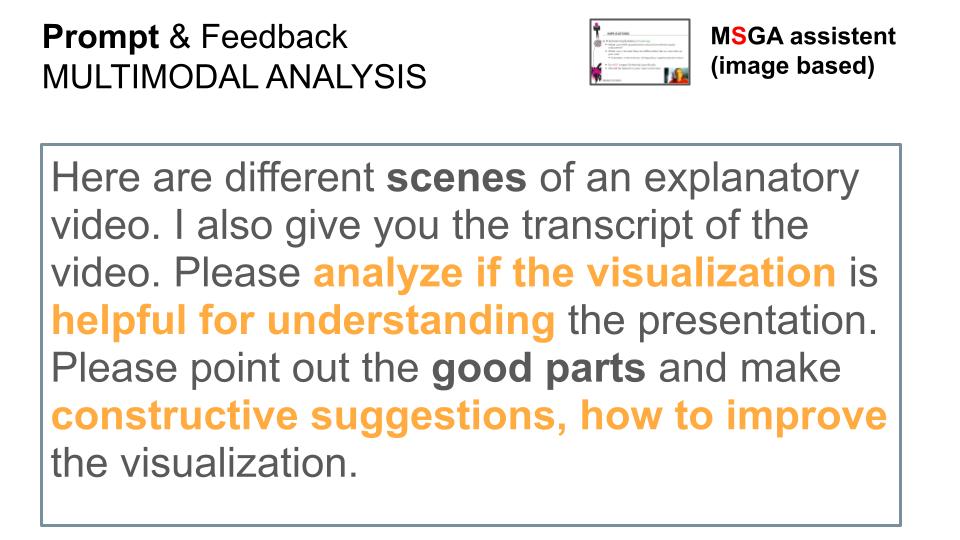
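To make the pipeline a bit more concrete, here is a minimal Python sketch of the transcription and slide extraction steps. It is not our hackathon code: the function names, the 10-second frame interval and the file layout are assumptions chosen for illustration, the download step is left out (a local video file is assumed), and it presumes that the `ffmpeg` command line tool and the open-source `openai-whisper` package are installed.

```python
import subprocess
from pathlib import Path


def audio_cmd(video: Path, wav: Path) -> list[str]:
    """Build an ffmpeg command that extracts a 16 kHz mono WAV audio track."""
    return ["ffmpeg", "-y", "-i", str(video),
            "-vn", "-ac", "1", "-ar", "16000", str(wav)]


def frames_cmd(video: Path, out_dir: Path, every_s: int = 10) -> list[str]:
    """Build an ffmpeg command that saves one frame every `every_s` seconds.

    The fixed interval is an assumption; it is a crude stand-in for real
    slide-change detection.
    """
    return ["ffmpeg", "-y", "-i", str(video),
            "-vf", f"fps=1/{every_s}", str(out_dir / "slide_%04d.png")]


def transcribe(wav: Path, model_name: str = "base") -> str:
    """Transcribe the audio with Whisper (imported lazily, so the rest of the
    module works even when the package is not installed)."""
    import whisper  # pip install openai-whisper

    model = whisper.load_model(model_name)
    return model.transcribe(str(wav))["text"]


def process(video: Path, work_dir: Path) -> dict:
    """Run both extraction steps and collect the inputs for the AI Assistants."""
    work_dir.mkdir(parents=True, exist_ok=True)
    wav = work_dir / "audio.wav"
    subprocess.run(audio_cmd(video, wav), check=True)
    subprocess.run(frames_cmd(video, work_dir), check=True)
    return {
        "transcript": transcribe(wav),
        "slides": sorted(work_dir.glob("slide_*.png")),
    }
```

Calling `process(Path("lecture.mp4"), Path("work"))` (with a hypothetical file name) would return the transcript text and the list of extracted frames, which could then be passed on to the text and vision models for feedback.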

Let me show you our implementation output, which was quite good:

Fig. 3: Prompting on Transcripts

Fig. 4: Feedback on transcripts from AI model

Fig. 6: Feedback from AI on Slides

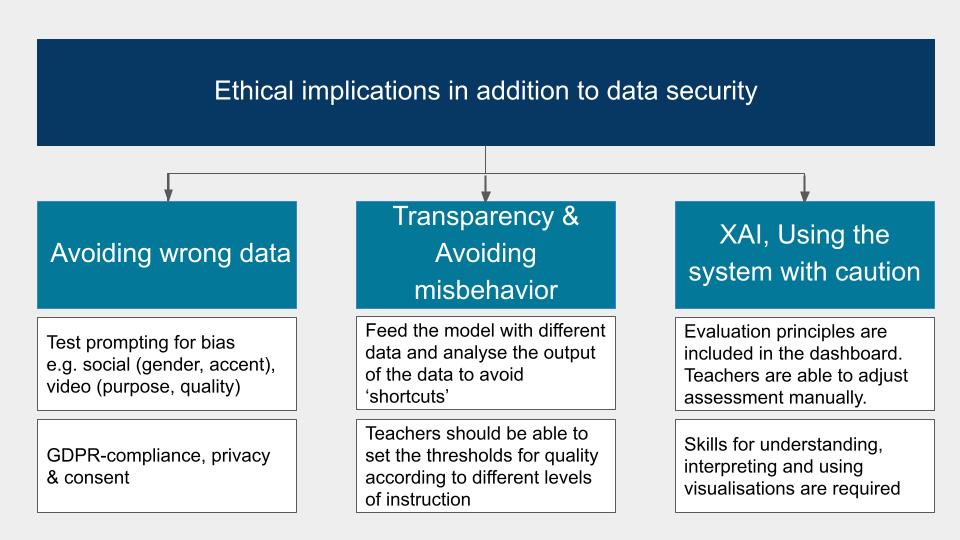

This is very much a work in progress, but we are in the process of writing up the basic architecture in an article that will be published by the end of the year, and we will do much more research and development to systematically test this approach to multimodal formative assessment analytics. Needless to say, there are a lot of ethical and methodological problems to be considered (see Fig. 7).

Fig. 7: Ethical implications – discussion slide

While we used mainly OpenAI tools for this prototype, we want to run open models for doing the analysis on our own servers. These two days have been great and we’ve learned a lot. Thanks to U Twente for hosting the event.

Fig. 8: After 2 days of hard work, Jan & Fatima are ready to present our project

Goodbye, U Twente
